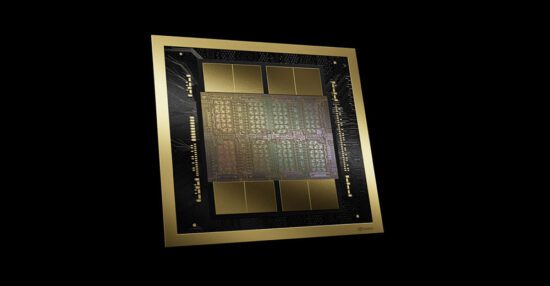

Nvidia is pumping up the power in its line of artificial intelligence chips with the announcement Monday of its Blackwell GPU architecture at its first in-person GPU Technology Conference (GTC) in five years.

According to Nvidia, the chip, designed for use in large data centers (the kind that power the likes of AWS, Azure, and Google), offers 20 PetaFLOPS of AI performance, which is 4x faster on AI-training workloads, 30x faster on AI-inferencing workloads, and up to 25x more power efficient than its predecessor.

Compared to its predecessor, the H100 “Hopper,” the B200 Blackwell is both more powerful and more energy efficient, Nvidia maintained. To train an AI model the size of GPT-4, for example, would take 8,000 H100 chips and 15 megawatts of power. That same task would take only 2,000 B200 chips and four megawatts of power.
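
The arithmetic behind that comparison can be checked with a short sketch. It uses only the chip counts and megawatt totals Nvidia quoted; the per-chip figure is simply the quoted total divided by the chip count, which assumes the megawatt numbers cover the whole training system rather than the GPUs alone.

```python
# Figures quoted by Nvidia for training a GPT-4-sized model.
h100 = {"chips": 8000, "megawatts": 15}
b200 = {"chips": 2000, "megawatts": 4}

# How many times fewer chips and how much less total power Blackwell needs.
chip_ratio = h100["chips"] / b200["chips"]
power_ratio = h100["megawatts"] / b200["megawatts"]

# Average power per chip in kilowatts (assumption: quoted total / chip count).
h100_kw_per_chip = h100["megawatts"] * 1000 / h100["chips"]
b200_kw_per_chip = b200["megawatts"] * 1000 / b200["chips"]

print(f"Chips needed:  {chip_ratio:.2f}x fewer")
print(f"Total power:   {power_ratio:.2f}x lower")
print(f"Power per chip: {h100_kw_per_chip:.3f} kW (H100) vs {b200_kw_per_chip:.3f} kW (B200)")
```

By these figures the job needs a quarter of the chips and a little under a quarter of the power, so the saving comes from using far fewer chips rather than from each chip drawing less.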

“This is the company’s first big advance in chip design since the debut of the Hopper architecture two years ago,” Bob O’Donnell, founder and chief analyst of Technalysis Research, wrote in his weekly LinkedIn newsletter.

Repackaging Exercise

However, Sebastien Jean, CTO of Phison Electronics, a Taiwanese electronics company, called the chip “a repackaging exercise.”

“It’s good, but it’s not groundbreaking,” he told TechNewsWorld. “It will run faster, use less power, and allow more compute into a smaller area, but from a technologist perspective, they just squished it smaller without really changing anything fundamental.”

“That means that their results are easily replicated by their competitors,” he said. “Though there is value in being first because while your competition catches up, you move on to the next thing.”

“When you force your competition into a permanent catch-up game, unless they have very strong leadership, they will fall into a ‘fast follower’ mentality without realizing it,” he said.

“By being aggressive and being first,” he continued, “Nvidia can cement the idea that they are the only true innovators, which drives further demand for their products.”

Although Blackwell may be a repackaging exercise, he added, it has a real net benefit. “In practical terms, people using Blackwell will be able to do more compute faster for the same power and space budget,” he noted. “That will allow solutions based on Blackwell to outpace and outperform their competition.”

Plug-Compatible With Past

O’Donnell asserted that the Blackwell architecture’s second-generation transformer engine is a significant advancement because it reduces AI floating point calculations to four bits from eight bits. “Practically speaking, by reducing these calculations down from 8-bit on previous generations, they can double the compute performance and model sizes they can support on Blackwell with this single change,” he said.
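
A rough sketch shows why halving the bit width doubles the model size that fits in a given amount of memory. The function name and the 70-billion-parameter model are hypothetical, chosen only for illustration, and the estimate counts weight storage alone, ignoring activations, scaling factors, and other overhead.

```python
def weight_gigabytes(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9

# Hypothetical model size, used only to illustrate the ratio.
params = 70_000_000_000

fp8_gb = weight_gigabytes(params, 8)  # 8-bit weights
fp4_gb = weight_gigabytes(params, 4)  # 4-bit weights

print(f"8-bit weights: {fp8_gb:.0f} GB")
print(f"4-bit weights: {fp4_gb:.0f} GB")
print(f"Memory ratio:  {fp8_gb / fp4_gb:.1f}x")
```

Under these assumptions the same memory holds a model with twice as many parameters at 4 bits as at 8 bits, which matches the doubling O’Donnell describes.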

The new chips are also compatible with their predecessors. “If you already have Nvidia’s systems with the H100, Blackwell is plug-compatible,” observed Jack E. Gold, founder and principal analyst with J.Gold Associates, an IT advisory company in Northborough, Mass.

“In theory, you could just unplug the H100s and plug the Blackwells in,” he told TechNewsWorld. “Although you can do that theoretically, you might not be able to do that financially.” For example, Nvidia’s H100 chips cost $30,000 to $40,000 each. Although Nvidia did not reveal the price of its new AI chip line, pricing will probably be along those lines.

Gold added that the Blackwell chips could help developers produce better AI applications. “The more data points you can analyze, the better the AI gets,” he explained. “What Nvidia is talking about with Blackwell is instead of being able to analyze billions of data points, you can analyze trillions.”

Also announced at the GTC were Nvidia Inference Microservices (NIM). “NIM tools are built on top of Nvidia’s CUDA platform and will enable businesses to bring custom applications and pretrained AI models into production environments, which should aid these firms in bringing new AI products to market,” Brian Colello, an equity strategist with Morningstar Research Services, in Chicago, wrote in an analyst’s note Tuesday.

Helping Deploy AI

“Big companies with data centers can adopt new technologies quickly and deploy them faster, but most human beings are in small and medium businesses that don’t have the resources to buy, customize, and deploy new technologies. Anything like NIM that can help them adopt new technology and deploy it more easily will be a benefit to them,” explained Shane Rau, a semiconductor analyst with IDC, a global market research company.

“With NIM, you’ll find models specific to what you want to do,” he told TechNewsWorld. “Not everyone wants to do AI in general. They want to do AI that’s specifically relevant to their company or enterprise.”

While NIM is not as exciting as the latest hardware designs, O’Donnell noted that it is significantly more important in the long run for several reasons.

“First,” he wrote, “it’s supposed to make it faster and more efficient for companies to move from GenAI experiments and POCs (proof of concepts) into real-world production. There simply aren’t enough data scientists and GenAI programming experts to go around, so many companies who’ve been eager to deploy GenAI have been limited by technical challenges. As a result, it’s great to see Nvidia helping ease this process.”

“Second,” he continued, “these new microservices allow for the creation of an entire new revenue stream and business strategy for Nvidia because they can be licensed on a per GPU/per hour basis (as well as other variations). This could prove to be an important, long-lasting, and more diversified means of generating income for Nvidia, so even though it’s early days, this is going to be important to watch.”

Entrenched Leader

Rau predicted that Nvidia will remain entrenched as the AI processing platform of choice for the foreseeable future. “But competitors like AMD and Intel will be able to take modest portions of the GPU market,” he said. “And because there are different chips you can use for AI (microprocessors, FPGAs, and ASICs), those competing technologies will be competing for market share and growing.”

“There are very few threats to Nvidia’s dominance in this market,” added Abdullah Anwer Ahmed, founder of Serene Data Ops, a data management company in San Francisco.

“On top of their superior hardware, their software solution CUDA has been the foundation of the underlying AI segments for over a decade,” he told TechNewsWorld.

“The main threat is that Amazon, Google, and Microsoft/OpenAI are working on building their own chips optimized around these models,” he continued. “Google already has their ‘TPU’ chip in production. Amazon and OpenAI have hinted at similar projects.”

“In any case, building one’s own GPUs is an option only available to the absolute largest companies,” he added. “Most of the LLM industry will continue to buy Nvidia GPUs.”